C#: Exploring the ConcurrentQueue Class in .NET

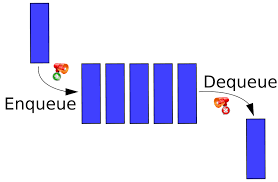

The ConcurrentQueue<T> class, found in the System.Collections.Concurrent namespace, provides a thread-safe FIFO (first-in, first-out) queue for .NET. Multiple threads can add and remove items concurrently without the caller taking explicit locks. Let's explore the features, use cases, usage, and benefits of the ConcurrentQueue class:

Features of ConcurrentQueue:

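Thread-Safe Operations: All public members of ConcurrentQueue, such as Enqueue (adding an item) and TryDequeue (removing an item), are thread-safe, so multiple threads can call them concurrently without corrupting the queue. Each call is atomic on its own. A sequence of calls, such as checking Count and then calling TryDequeue, is not, so code should act on the return value of the Try methods rather than on an earlier check.

Non-Blocking Operations: Rather than wrapping an ordinary queue in a lock, ConcurrentQueue relies largely on lock-free techniques internally (atomic compare-and-swap style updates), which keeps Enqueue and TryDequeue efficient under heavy concurrent access. The operations also never wait for data: TryDequeue and TryPeek return false immediately when the queue is empty.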

Optimized for Concurrent Access: ConcurrentQueue is designed for scenarios where multiple threads add and remove items at the same time. Producers work at the tail of the queue and consumers at the head, which generally keeps contention between them low and throughput high.

Supports Enumeration: ConcurrentQueue supports enumeration, so you can iterate over its elements safely even while other threads are modifying the queue. The enumeration is a moment-in-time snapshot: it reflects the contents of the queue when GetEnumerator was called and does not include items added or removed afterwards.
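The thread-safety and snapshot behavior described above can be illustrated with a small sketch. It assumes a .NET 6 or later console project using top-level statements; the variable names and the item count of 1000 are arbitrary choices for the illustration.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

var queue = new ConcurrentQueue<int>();

// Many threads enqueue at once; no lock is needed around Enqueue.
Parallel.For(0, 1000, i => queue.Enqueue(i));
Console.WriteLine($"Items after parallel enqueue: {queue.Count}");

// foreach works on a snapshot taken when enumeration starts, so modifying
// the queue inside the loop neither throws nor extends the loop.
int seen = 0;
foreach (int item in queue)
{
    queue.Enqueue(-1); // a plain Queue<T> would throw InvalidOperationException here
    seen++;
}

Console.WriteLine($"Items seen by the enumerator: {seen}");
Console.WriteLine($"Items in the queue afterwards: {queue.Count}");
```

The enumerator sees the 1000 items that were present when the loop started, while the queue itself ends up holding those items plus the ones added during the loop.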

Use Cases:

ConcurrentQueue<T> is particularly useful when multiple threads in the same process need to share a queue. Here are some common use cases for ConcurrentQueue:

Producer-Consumer Pattern: In a producer-consumer scenario, multiple producer threads add items to a queue, while multiple consumer threads dequeue and process these items concurrently. ConcurrentQueue provides a thread-safe way to hand items from producers to consumers without manual locking around the queue. Consumers still need a strategy for an empty queue, such as polling or a separate signal.

Parallel Task Processing: In parallel programming, tasks may generate intermediate results that need to be processed asynchronously. ConcurrentQueue allows tasks to enqueue their results for further processing by other threads, enabling scalable parallel processing workflows.

Event Handling: In event-driven architectures, multiple event handlers may need to process incoming events concurrently. ConcurrentQueue can be used to buffer incoming events and hand them to event handlers in a thread-safe manner, which helps event processing stay responsive under heavy load.

Workload Distribution: ConcurrentQueue is an in-memory collection that lives inside a single process, so it does not distribute work across machines by itself. Within a process, it can serve as the shared queue from which a pool of worker threads pulls tasks, for example the local queue on a node that receives work from a message broker. Because each idle worker takes the next available item, the load spreads across workers naturally.

Task Synchronization: ConcurrentQueue can also be used to coordinate the execution of tasks in concurrent workflows. For example, one thread may enqueue tasks for execution, while other threads dequeue and execute these tasks in parallel. Tasks are dequeued in FIFO order, although with several consumers they may complete out of order.

Message Passing: ConcurrentQueue can serve as a communication channel for exchanging messages between components running in the same process. Messages are enqueued by one thread and dequeued and processed by another, providing a thread-safe mechanism for message passing. Communication between separate processes (IPC) requires a different mechanism, such as pipes, sockets, or a message broker.
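As a rough illustration of the producer-consumer use case, here is a minimal sketch, assuming a .NET 6 or later console project. The counts, the `producersDone` flag, and the polling strategy are assumptions made for the example, not a prescribed design.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

const int ProducerCount = 3;       // illustrative sizes, chosen for the demo
const int ConsumerCount = 2;
const int ItemsPerProducer = 100;

var workQueue = new ConcurrentQueue<int>();
int processed = 0;
bool producersDone = false;

// Producers: each task enqueues its own batch of work items.
Task[] producers = Enumerable.Range(0, ProducerCount)
    .Select(p => Task.Run(() =>
    {
        for (int i = 0; i < ItemsPerProducer; i++)
        {
            workQueue.Enqueue(p * ItemsPerProducer + i);
        }
    }))
    .ToArray();

// Consumers: poll with TryDequeue until the producers are done and the queue is drained.
Task[] consumers = Enumerable.Range(0, ConsumerCount)
    .Select(_ => Task.Run(() =>
    {
        var spinner = new SpinWait();
        while (true)
        {
            // Read the flag before trying to dequeue: if it was already set and the
            // dequeue still fails, every item has been enqueued and taken.
            bool done = Volatile.Read(ref producersDone);
            if (workQueue.TryDequeue(out _))
            {
                Interlocked.Increment(ref processed);
            }
            else if (done)
            {
                break;
            }
            else
            {
                spinner.SpinOnce(); // queue is momentarily empty; back off briefly
            }
        }
    }))
    .ToArray();

Task.WaitAll(producers);
Volatile.Write(ref producersDone, true);
Task.WaitAll(consumers);

Console.WriteLine($"Processed {processed} items");
```

Polling keeps the sketch short. When consumers should sleep until work arrives, BlockingCollection<T>, which wraps a ConcurrentQueue<T> by default, may be a better fit.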

Usage of ConcurrentQueue:

Creating a ConcurrentQueue:

    using System.Collections.Concurrent;

    // Create a new ConcurrentQueue instance
    ConcurrentQueue<int> queue = new ConcurrentQueue<int>();
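A queue can also be created from an existing collection, using the constructor overload that accepts an IEnumerable<T>. The following is a small sketch under that assumption; the sample strings are made up for the example.

```csharp
using System;
using System.Collections.Concurrent;

// Seed the queue from an existing collection; items keep their enumeration order.
var seeded = new ConcurrentQueue<string>(new[] { "first", "second", "third" });

seeded.TryPeek(out var front);
Console.WriteLine($"Count: {seeded.Count}, front: {front}"); // Count: 3, front: first
```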

Enqueuing and Dequeuing Items:

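    // Enqueue items; Enqueue always succeeds because the queue is unbounded
    queue.Enqueue(10);
    queue.Enqueue(20);

    // Try to dequeue the front item; TryDequeue returns false if the queue is empty
    if (queue.TryDequeue(out int item))
    {
        Console.WriteLine("Dequeued item: " + item);
    }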
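A common pattern built on TryDequeue is draining the queue in a loop. The sketch below, which assumes a .NET 6 or later console project and uses illustrative values, also shows that items come out in the order they went in.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

var queue = new ConcurrentQueue<int>();
for (int i = 1; i <= 5; i++)
{
    queue.Enqueue(i * 10);
}

// Drain: keep calling TryDequeue until it reports that the queue is empty.
var drained = new List<int>();
while (queue.TryDequeue(out int item))
{
    drained.Add(item);
}

Console.WriteLine(string.Join(", ", drained)); // 10, 20, 30, 40, 50

// On an empty queue TryDequeue returns false; the out value should not be used.
bool found = queue.TryDequeue(out _);
Console.WriteLine($"Found another item: {found}"); // Found another item: False
```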

Peeking at the Front Item:

    // Peek at the front item without dequeuing it
    if (queue.TryPeek(out int frontItem))
    {
        Console.WriteLine("Front item: " + frontItem);
    }

Checking Queue Size:

    // Get the number of items in the queue. This is a snapshot and may already
    // be stale if other threads are modifying the queue.
    int count = queue.Count;
    Console.WriteLine("Queue size: " + count);
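Because Count is only a snapshot, it is usually safer not to base decisions on it. The sketch below, with a made-up job name, shows two habits the .NET documentation points toward: using IsEmpty for emptiness checks, and relying on the return value of TryDequeue instead of a check-then-act sequence.

```csharp
using System;
using System.Collections.Concurrent;

var queue = new ConcurrentQueue<string>();
queue.Enqueue("job-1"); // hypothetical work item

// For emptiness checks, IsEmpty is recommended over comparing Count to 0.
Console.WriteLine($"IsEmpty: {queue.IsEmpty}, Count: {queue.Count}");

// Avoid: if (queue.Count > 0) { queue.TryDequeue(...); }
// Another thread can empty the queue between the two calls.
// Instead, attempt the dequeue and branch on its result.
if (queue.TryDequeue(out var job))
{
    Console.WriteLine($"Processing {job}");
}
else
{
    Console.WriteLine("Nothing to do");
}
```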

Benefits of ConcurrentQueue:

Thread Safety: ConcurrentQueue makes each individual operation safe to call from multiple threads, so callers do not need their own locks around the queue. With no caller-held locks there is less opportunity for deadlocks, and concurrent access cannot corrupt the queue's internal state.

Scalability: ConcurrentQueue is designed for high-concurrency scenarios, making it suitable for applications with heavy multi-threaded workloads where performance and scalability are critical.

Simplified Code: By providing built-in thread safety, ConcurrentQueue simplifies the implementation of multi-threaded algorithms and data structures, leading to cleaner and more maintainable code.

Optimized Performance: ConcurrentQueue uses largely lock-free algorithms to minimize contention, which typically yields higher throughput under concurrent load than a Queue<T> protected by a single lock.
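To make the "simplified code" point concrete, here is a side-by-side sketch of the same parallel fill written against a lock-protected Queue<T> and against ConcurrentQueue<T>. It assumes a .NET 6 or later console project; the names and the item count are illustrative, and the sketch compares code shape only, not measured performance.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

const int ItemCount = 10_000; // arbitrary size for the illustration

// Option 1: a plain Queue<T> is not thread-safe, so every access needs a lock.
var lockedQueue = new Queue<int>();
var gate = new object();
Parallel.For(0, ItemCount, i =>
{
    lock (gate)
    {
        lockedQueue.Enqueue(i);
    }
});

// Option 2: ConcurrentQueue<T> handles the synchronization internally.
var concurrentQueue = new ConcurrentQueue<int>();
Parallel.For(0, ItemCount, i => concurrentQueue.Enqueue(i));

Console.WriteLine($"Locked queue: {lockedQueue.Count}, concurrent queue: {concurrentQueue.Count}");
```

Both versions end up with the same number of items. The difference is that the first relies on every caller remembering to take the lock, while the second cannot be used incorrectly in that way.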

Conclusion:

The ConcurrentQueue<T> class is a powerful tool for building scalable, thread-safe .NET applications that need concurrent access to a FIFO queue within a process. Its efficient, non-blocking operations simplify multi-threaded code while keeping performance high. Understanding its features, usage, and limits, such as snapshot-based enumeration and Count, is essential for using it well in concurrent programming tasks.